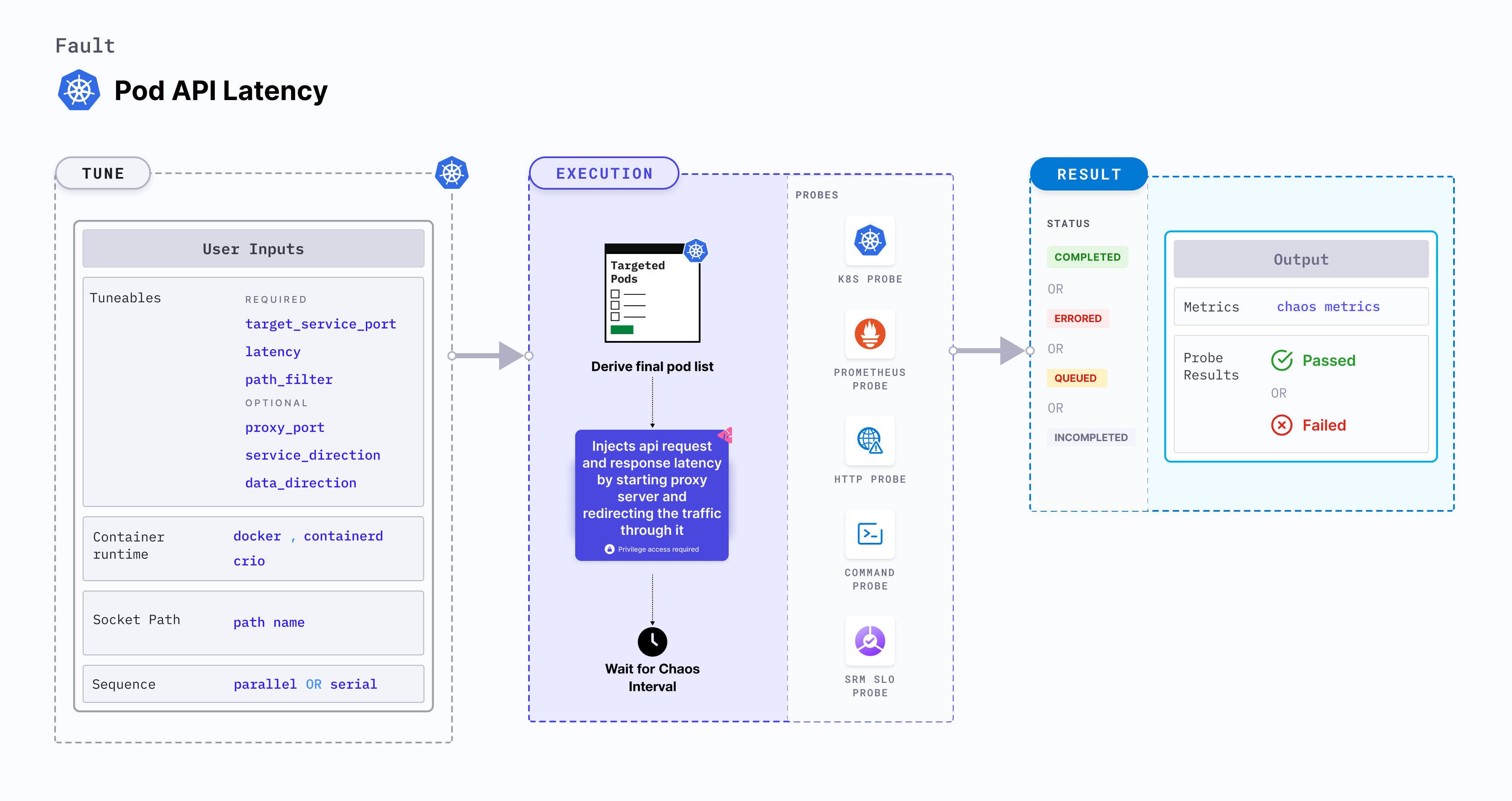

Pod API latency

Pod API latency is a Kubernetes pod-level chaos fault that injects API request and response latency by starting a proxy server and redirecting traffic through it.

A transient proxy (also known as a helper pod) is brought up for the chaos duration to intercept the requests made from the target application to another service (or elsewhere) and inject chaos accordingly. The target application refers to the application pods that send egress traffic to an endpoint.

This video provides a step-by-step walkthrough of the execution process for the Pod API Latency experiment.

Use cases

Pod API latency:

- Simulates high-traffic scenarios to test the resilience and performance of an application or API that may experience delays under heavy load.

- Simulates situations where an API request takes longer than expected to respond. By introducing latency, you can test how well your application handles timeouts and implements appropriate error handling.

- Tests how well the application handles network delays and failures, and whether it recovers gracefully when network connectivity is restored.

Permissions required

Below is a sample Kubernetes role that defines the permissions required to execute the fault.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: hce
      name: pod-api-latency
    spec:
      definition:
        scope: Cluster # Supports "Namespaced" mode too
    permissions:
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["create", "delete", "get", "list", "patch", "deletecollection", "update"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "get", "list", "patch", "update"]
      - apiGroups: [""]
        resources: ["pods/log"]
        verbs: ["get", "list", "watch"]
      # workload controllers belong to the "apps" API group
      - apiGroups: ["apps"]
        resources: ["deployments", "statefulsets"]
        verbs: ["get", "list"]
      - apiGroups: ["apps"]
        resources: ["replicasets", "daemonsets"]
        verbs: ["get", "list"]
      # chaos custom resources belong to the "litmuschaos.io" API group
      - apiGroups: ["litmuschaos.io"]
        resources: ["chaosEngines", "chaosExperiments", "chaosResults"]
        verbs: ["create", "delete", "get", "list", "patch", "update"]
      - apiGroups: ["batch"]
        resources: ["jobs"]
        verbs: ["create", "delete", "get", "list", "deletecollection"]

Prerequisites

- Kubernetes > 1.16

- The application pods should be in the running state before and after injecting chaos.

Mandatory tunables

| Tunable | Description | Notes |
|---|---|---|
| TARGET_CONTAINER | Name of the container subject to API latency. | None. For more information, go to target specific container. |
| NODE_LABEL | Node label used to filter the target node if the TARGET_NODE environment variable is not set. | Mutually exclusive with the TARGET_NODE environment variable. If both are provided, the fault uses TARGET_NODE. For more information, go to node label. |
| TARGET_SERVICE_PORT | Port of the target service. | Default: 80. For more information, go to target service port. |
| LATENCY | Delay added to the API requests and responses. | Supports ms, s, m, and h units. Default: 2s. For more information, go to latency. |

Optional tunables

| Tunable | Description | Notes |
|---|---|---|
| PATH_FILTER | API path or route used for filtering. | Targets all paths if not provided. For more information, go to path filter. |
| TRANSACTION_PERCENTAGE | Percentage of the API requests to be affected. | Supports values in the range (0,100]. Targets all requests if not provided. For more information, go to transaction percentage. |
| HEADERS_FILTERS | Filters for HTTP request headers. Accepts multiple comma-separated headers in the format key1:value1,key2:value2. | For more information, go to header filters. |
| METHODS | Filters for the HTTP request method. Accepts comma-separated HTTP methods in upper case, such as "GET,POST". | For more information, go to methods. |
| QUERY_PARAMS | Filters for HTTP request query parameters. Accepts multiple comma-separated query parameters in the format param1:value1,param2:value2. | For more information, go to query params. |
| SOURCE_HOSTS | Comma-separated source host names used as filters, indicating the origin of the HTTP request. Relevant only to the "ingress" type. | For more information, go to source hosts. |
| SOURCE_IPS | Comma-separated source IPs used as filters, indicating the origin of the HTTP request. Relevant only to the "ingress" type. | For more information, go to source ips. |
| DESTINATION_HOSTS | Comma-separated destination host names used as filters, indicating the hosts on which you call the API. Applies only to the "egress" type. | For more information, go to destination hosts. |
| DESTINATION_IPS | Comma-separated destination IPs used as filters, indicating the hosts on which you call the API. Applies only to the "egress" type. | For more information, go to destination hosts. |
| PROXY_PORT | Port where the proxy listens for requests. | Default: 20000. For more information, go to proxy port. |
| LIB_IMAGE | Image used to inject chaos. | Default: harness/chaos-go-runner:main-latest. For more information, go to image used by the helper pod. |
| SERVICE_DIRECTION | Direction of the flow of control, ingress or egress. | Default: ingress. For more information, go to service direction. |
| DATA_DIRECTION | API payload type: request, response, or both. | Default: both. For more information, go to data direction. |
| DESTINATION_PORTS | Comma-separated list of the destination service or host ports for which egress traffic should be affected. | Default: 80,443. For more information, go to destination ports. |
| HTTPS_ENABLED | Enables HTTPS support for both incoming and outgoing traffic. | Default: false. For more information, go to https. |
| CA_CERTIFICATES | CA certificates used by the proxy server to generate the server certificates for the TLS handshake between the target application and the proxy server. | These CA certificates must also be added to the target application's root certificates. For more information, go to CA certificates. |
| SERVER_CERTIFICATES | Server certificates used by the proxy server for the TLS handshake between the target application and the proxy server. | The corresponding CA certificates should be loaded as root certificates inside the target application. For more information, go to server certificates. |
| CLIENT_CERTIFICATES | Client certificates used by the proxy server for the MTLS handshake between the upstream server and the proxy server. | The corresponding CA certificates should be loaded as root certificates inside the upstream server. For more information, go to client certificates. |
| HTTPS_ROOT_CERT_PATH | Root CA certificate directory path. | Must be configured if the root CA certificate directory differs from /etc/ssl/certs. Refer to https://go.dev/src/crypto/x509/root_linux.go for the default certificate directory on various Linux distributions. For more information, go to https. |
| HTTPS_ROOT_CERT_FILE_NAME | Root CA certificate file name. | Must be configured if the root CA certificate file name differs from ca-certificates.crt. Refer to https://go.dev/src/crypto/x509/root_linux.go for the default certificate file names on various Linux distributions. For more information, go to https. |
| NETWORK_INTERFACE | Network interface used for the proxy. | Default: eth0. For more information, go to network interface. |
| CONTAINER_RUNTIME | Container runtime interface for the cluster. | Default: containerd. Supports docker, containerd, and crio. For more information, go to container runtime. |
| SOCKET_PATH | Path to the containerd/crio/docker socket file. | Default: /run/containerd/containerd.sock. For more information, go to socket path. |
| TOTAL_CHAOS_DURATION | Duration to inject chaos (in seconds). | Default: 60s. For more information, go to duration of the chaos. |
| TARGET_PODS | Comma-separated list of application pod names subject to pod API latency. | If not provided, the fault selects target pods randomly based on the provided appLabels. For more information, go to target specific pods. |
| PODS_AFFECTED_PERC | Percentage of total pods to target. Provide numeric values. | Default: 0 (corresponds to 1 replica). For more information, go to pod affected percentage. |
| RAMP_TIME | Period to wait before and after injecting chaos (in seconds). | For example, 30s. For more information, go to ramp time. |
| SEQUENCE | Sequence of chaos execution for multiple target pods. | Default: parallel. Supports serial and parallel. For more information, go to sequence of chaos execution. |
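
The pod-selection and timing tunables in the table have no dedicated example on this page, so here is a minimal sketch of how they might be combined. The values are arbitrary illustrations, and writing the durations as plain numbers of seconds is an assumption based on the "in seconds" notes in the table. The engine name, labels, and service account mirror the other examples on this page.

```yaml
# illustrative sketch: pod selection and timing tunables
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # percentage of the matching pods to target (example value)
            - name: PODS_AFFECTED_PERC
              value: "50"
            # inject chaos into the selected pods one after another
            - name: SEQUENCE
              value: "serial"
            # chaos duration, assumed to be a plain number of seconds
            - name: TOTAL_CHAOS_DURATION
              value: "120"
            # wait before and after chaos, assumed to be a plain number of seconds
            - name: RAMP_TIME
              value: "30"
            # provide the port of the targeted service
            - name: TARGET_SERVICE_PORT
              value: "80"
```

To pin the fault to specific pods instead of a percentage, TARGET_PODS accepts a comma-separated list of pod names, as described in the table.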

Target service port

Port of the target service. Tune it by using the TARGET_SERVICE_PORT environment variable.

The following YAML snippet illustrates the use of this environment variable:

    ## provide the port of the targeted service
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"
                - name: PATH_FILTER
                  value: '/status'

Latency

Delay added to the API request and response. Tune it by using the LATENCY environment variable.

The following YAML snippet illustrates the use of this environment variable:

    ## provide the latency value
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # provide the latency value
                - name: LATENCY
                  value: "2s"
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"
                - name: PATH_FILTER
                  value: '/status'
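
Because LATENCY accepts ms, s, m, and h units, sub-second delays can be expressed directly. The sketch below is one possible variation that delays only responses by 500 milliseconds for half of the requests. The specific values are illustrative assumptions, not recommendations.

```yaml
# illustrative sketch: sub-second latency on responses only
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # delay in milliseconds (example value)
            - name: LATENCY
              value: "500ms"
            # apply the delay to responses only (default is both)
            - name: DATA_DIRECTION
              value: "response"
            # affect half of the requests (example value)
            - name: TRANSACTION_PERCENTAGE
              value: "50"
            # provide the port of the targeted service
            - name: TARGET_SERVICE_PORT
              value: "80"
```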

Path filter

API sub path (or route) to filter the API calls. Tune it by using the PATH_FILTER environment variable.

The following YAML snippet illustrates the use of this environment variable:

    ## provide api path filter
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # provide the api path filter
                - name: PATH_FILTER
                  value: '/status'
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"

Destination ports

A comma-separated list of the destination service or host ports for which egress traffic should be affected as a result of chaos testing on the target application. Tune it by using the DESTINATION_PORTS environment variable.

It is applicable only for the egress SERVICE_DIRECTION.

The following YAML snippet illustrates the use of this environment variable:

    ## provide destination ports
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # provide destination ports
                - name: DESTINATION_PORTS
                  value: '80,443'
                # provide the api path filter
                - name: PATH_FILTER
                  value: '/status'
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"
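
Since DESTINATION_PORTS applies only to the egress direction and SERVICE_DIRECTION defaults to ingress, an egress experiment would presumably set the direction explicitly. The sketch below shows one way to do that. The port list is an example value, and keeping TARGET_SERVICE_PORT is carried over from the other examples rather than a confirmed requirement for egress.

```yaml
# illustrative sketch: destination ports with an explicit egress direction
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # DESTINATION_PORTS is applicable only for egress
            - name: SERVICE_DIRECTION
              value: 'egress'
            # destination ports whose egress traffic is affected (example values)
            - name: DESTINATION_PORTS
              value: '8080,8443'
            # carried over from the other examples on this page
            - name: TARGET_SERVICE_PORT
              value: "80"
```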

HTTPS

To enable HTTPS support for both incoming and outgoing traffic between the target and the proxy, set the HTTPS_ENABLED environment variable to true. Then configure TLS in one of two ways: using self-signed certificates or using trusted certificates.

Certificate Configuration for TLS/MTLS

To establish secure communication between your target application and the chaos proxy server, you need to configure certificates based on your requirements:

- For TLS (one-way authentication): Configure server-side certificates using either Self-Signed or Trusted Certificates

- For MTLS (mutual authentication): Configure server-side certificates (Self-Signed or Trusted) + Client Certificates

Using Self-Signed Certificates

Choose one of the following approaches to configure server-side certificates for TLS:

- CA Certificates (Dynamic Certificate Generation)

  Use this approach when you want the proxy to dynamically generate server certificates during the TLS handshake. This is the most flexible option for handling multiple domains.

  What you need:

  - ca.key: The private key used to sign certificates.

  - ca.crt: The Certificate Authority (CA) certificate.

  Prerequisites:

  - Load ca.crt as a trusted root CA certificate in your target application before running the chaos experiment.

  Configuration Steps:

  - Combine your CA key and certificate into a single PEM file:

        cat ca.key ca.crt > ca.pem

  - Base64-encode the combined file and add it to the CA_CERTIFICATES environment variable:

        cat ca.pem | base64

  How it works: The proxy dynamically generates server certificates with the appropriate domain names during each TLS handshake. These certificates are signed by the self-signed CA (provided in the CA_CERTIFICATES environment variable) and then presented to the target application.

- Server Certificates (Pre-Generated Certificates)

  Use this approach when you want to use pre-generated server certificates with specific domain names. This is ideal when you know all the domains your application will communicate with.

  What you need:

  - server.key: The private key for the server certificate.

  - server.crt: The server certificate signed by your CA.

  Understanding Domain Types:

  - Internal Domains: Organization-managed services (e.g., api.mycompany.internal). These typically include internal microservices and soft dependencies.

  - External Domains: Third-party services (e.g., dynamodb.amazonaws.com, s3.amazonaws.com). These are usually hard dependencies.

  - SAN (Subject Alternative Names): A list of all domain names that the certificate should be valid for. These are specified when creating the Certificate Signing Request (CSR).

  Prerequisites:

  - Load ca.crt as a trusted root CA certificate in your target application before running the chaos experiment.

  - Generate server.crt with all necessary domain names (using SAN) and sign it with your CA certificates.

  Configuration Steps:

  - Combine your server key and certificate into a single PEM file:

        cat server.key server.crt > server.pem

  - Base64-encode the combined file and add it to the SERVER_CERTIFICATES environment variable:

        cat server.pem | base64

  How it works: The proxy uses the pre-generated server certificates (provided in the SERVER_CERTIFICATES environment variable) during the TLS handshake with your target application.

About Intermediate Certificates: Intermediate certificates form a chain of trust between your server certificate and the root CA. They are essential for establishing trust when your certificate is signed by an intermediate CA rather than directly by a root CA. Include them in the certificate chain to ensure proper validation.
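
To show where the base64 output from the CA certificate steps ends up, here is a minimal sketch of a ChaosEngine that enables HTTPS with dynamically generated certificates. The CA_CERTIFICATES value is a placeholder to be replaced with your own base64 string, and port 443 is an assumption about the target service rather than a requirement.

```yaml
# illustrative sketch: HTTPS with CA certificates (dynamic generation)
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # enable HTTPS support in the proxy
            - name: HTTPS_ENABLED
              value: "true"
            # placeholder: paste the base64 output of ca.pem here
            - name: CA_CERTIFICATES
              value: "<base64-encoded ca.pem>"
            # assumed HTTPS port of the target service
            - name: TARGET_SERVICE_PORT
              value: "443"
```

For the pre-generated approach, the same shape would apply with SERVER_CERTIFICATES holding the base64 output of server.pem instead of CA_CERTIFICATES.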

Using Trusted Certificates

When using certificates signed by a publicly trusted Certificate Authority (such as Let's Encrypt, DigiCert, or your organization's trusted CA), you don't need to manually load the CA certificate into your target application, as it's already trusted by default.

- For Internal Domains Only

  Use this when your chaos experiments only affect internal, organization-managed services.

  Prerequisites:

  - Generate server certificates that include all internal domain names (using SAN).

  - Sign the certificates using a trusted CA.

  - No need to load the CA certificate in the target application since it's already trusted.

  Configuration Steps:

  - Combine your server key, certificate, and any intermediate certificates:

        cat server.key server.crt intermediate.crt > server.pem

    Note: Replace intermediate.crt with your actual intermediate certificate file(s). If you have multiple intermediate certificates, include them all in order.

  - Base64-encode the combined file and add it to the SERVER_CERTIFICATES environment variable:

        cat server.pem | base64

- For Both Internal and External Domains

  Use this when your chaos experiments affect both internal services and external third-party services (e.g., AWS, Azure, GCP).

  Prerequisites:

  - Generate server certificates that include both internal and external domain names (using SAN).

  - Sign the certificates using a trusted CA.

  - No need to load the CA certificate in the target application since it's already trusted.

  Configuration Steps:

  - Combine your server key, certificate, and any intermediate certificates:

        cat server.key server.crt intermediate.crt > server.pem

    Note: Ensure your certificate's SAN includes all domains your application communicates with.

  - Base64-encode the combined file and add it to the SERVER_CERTIFICATES environment variable:

        cat server.pem | base64

Client Certificates (For MTLS)

Client certificates are required in addition to server-side certificates (Self-Signed or Trusted) when you need mutual TLS (MTLS). They enable the proxy to authenticate itself to the upstream server, completing the mutual authentication setup.

When to use: Use client certificates when the upstream server requires client certificate authentication for MTLS communication. This applies to both self-signed and trusted certificate setups.

What you need:

- client.key: The private key for the client certificate.

- client.crt: The client certificate signed by a CA trusted by the upstream server.

- Plus: Server-side certificates configured using either Self-Signed Certificates (CA or Server Certificates) OR Trusted Certificates.

Prerequisites:

- Configure server-side TLS first using one of the approaches above (Self-Signed or Trusted Certificates).

- Ensure the upstream server is configured to require and validate client certificates.

- The CA that signed client.crt must be trusted by the upstream server.

Configuration Steps:

- Combine your client key and certificate into a single PEM file:

      cat client.key client.crt > client.pem

- Base64-encode the combined file and add it to the CLIENT_CERTIFICATES environment variable:

      cat client.pem | base64

How it works:

- Proxy ↔ Target Application: The proxy uses server-side certificates (CA, Server, or Trusted) for the TLS handshake.

- Proxy ↔ Upstream Server: The proxy presents client certificates when connecting to the upstream server.

- Result: Mutual authentication (MTLS) is established, where both the proxy and the upstream server verify each other's identity.
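
The MTLS flow described above could be configured roughly as follows: server-side certificates cover the handshake with the target application, and client certificates cover the handshake with the upstream server. Both certificate values are placeholders, and port 443 is an assumption about the target service.

```yaml
# illustrative sketch: MTLS with pre-generated server and client certificates
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # enable HTTPS support in the proxy
            - name: HTTPS_ENABLED
              value: "true"
            # placeholder: base64 output of server.pem (proxy to target application)
            - name: SERVER_CERTIFICATES
              value: "<base64-encoded server.pem>"
            # placeholder: base64 output of client.pem (proxy to upstream server)
            - name: CLIENT_CERTIFICATES
              value: "<base64-encoded client.pem>"
            # assumed HTTPS port of the target service
            - name: TARGET_SERVICE_PORT
              value: "443"
```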

Quick Reference:

For TLS (one-way authentication):

- Choose Self-Signed Certificates:

  - CA Certificates for dynamic certificate generation with multiple domains

  - Server Certificates for pre-generated certificates with fixed domains

- OR choose Trusted Certificates for simplified setup with a trusted CA

For MTLS (mutual authentication):

- Configure server-side certificates using one of the TLS options above

- Add Client Certificates to enable mutual authentication with the upstream server
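
When the target application's image keeps its root CA bundle somewhere other than /etc/ssl/certs/ca-certificates.crt, the HTTPS_ROOT_CERT_PATH and HTTPS_ROOT_CERT_FILE_NAME tunables point the fault at the right location. The sketch below assumes a Fedora or RHEL style image, where Go's root_linux.go lists /etc/pki/tls/certs/ca-bundle.crt as the bundle. Verify the actual path inside your own image before relying on it.

```yaml
# illustrative sketch: non-default root CA bundle location
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # enable HTTPS support in the proxy
            - name: HTTPS_ENABLED
              value: "true"
            # root CA directory (assumed Fedora/RHEL style layout)
            - name: HTTPS_ROOT_CERT_PATH
              value: "/etc/pki/tls/certs"
            # root CA bundle file name within that directory
            - name: HTTPS_ROOT_CERT_FILE_NAME
              value: "ca-bundle.crt"
            # assumed HTTPS port of the target service
            - name: TARGET_SERVICE_PORT
              value: "443"
```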

Advanced fault tunables

- PROXY_PORT: Port where the proxy listens for requests and responses.

- SERVICE_DIRECTION: Direction of the flow of control, either ingress or egress.

- DATA_DIRECTION: API payload type. It supports request, response, and both values.

- NETWORK_INTERFACE: Network interface used for the proxy.

The following YAML snippet illustrates the use of these environment variables:

    # it injects the api latency fault
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # provide the proxy port
                - name: PROXY_PORT
                  value: '20000'
                # provide the connection type
                - name: SERVICE_DIRECTION
                  value: 'ingress'
                # provide the payload type
                - name: DATA_DIRECTION
                  value: 'both'
                # provide the network interface
                - name: NETWORK_INTERFACE
                  value: 'eth0'
                # provide the api path filter
                - name: PATH_FILTER
                  value: '/status'
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"

Advanced filters

- HEADERS_FILTERS: HTTP request header filters. Accepts multiple comma-separated headers in the format key1:value1,key2:value2.

- METHODS: HTTP request method filters. Accepts comma-separated HTTP methods in upper case, for example, GET,POST.

- QUERY_PARAMS: HTTP request query parameter filters. Accepts multiple comma-separated query parameters in the format param1:value1,param2:value2.

- SOURCE_HOSTS: Comma-separated source host name filters, indicating the origin of the HTTP request. Relevant only to the ingress type, specified by the SERVICE_DIRECTION environment variable.

- SOURCE_IPS: Comma-separated source IP filters, indicating the origin of the HTTP request. Relevant only to the ingress type, specified by the SERVICE_DIRECTION environment variable.

- DESTINATION_HOSTS: Comma-separated destination host name filters, indicating the hosts on which you call the API. Applies only to the egress type, specified by the SERVICE_DIRECTION environment variable.

- DESTINATION_IPS: Comma-separated destination IP filters, indicating the hosts on which you call the API. Applies only to the egress type, specified by the SERVICE_DIRECTION environment variable.

- TRANSACTION_PERCENTAGE: Percentage of API requests to be affected, with supported values in the range (0, 100]. If not specified, it targets all requests.

The following YAML snippet illustrates the use of these environment variables:

    # it injects the api latency fault
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # provide the headers filters
                - name: HEADERS_FILTERS
                  value: 'key1:value1,key2:value2'
                # provide the methods filters
                - name: METHODS
                  value: 'GET,POST'
                # provide the query params filters
                - name: QUERY_PARAMS
                  value: 'param1:value1,param2:value2'
                # provide the source hosts filters
                - name: SOURCE_HOSTS
                  value: 'host1,host2'
                # provide the source ips filters
                - name: SOURCE_IPS
                  value: 'ip1,ip2'
                # provide the transaction percentage within (0,100] range
                # for example, it will affect 50% of the total requests
                - name: TRANSACTION_PERCENTAGE
                  value: '50'
                # provide the connection type
                - name: SERVICE_DIRECTION
                  value: 'ingress'
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"
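
The example above covers the ingress-only filters. For the egress-only filters, a comparable sketch might look like the following. The host name and IP address are hypothetical placeholders (the IP comes from a range reserved for documentation), and keeping TARGET_SERVICE_PORT is carried over from the other examples rather than a confirmed requirement for egress.

```yaml
# illustrative sketch: egress-only destination filters
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # destination filters apply only to the egress direction
            - name: SERVICE_DIRECTION
              value: 'egress'
            # hypothetical destination host name
            - name: DESTINATION_HOSTS
              value: 'payments.example.internal'
            # hypothetical destination IP from a documentation range
            - name: DESTINATION_IPS
              value: '192.0.2.10'
            # restrict the fault to GET requests (example value)
            - name: METHODS
              value: 'GET'
            # carried over from the other examples on this page
            - name: TARGET_SERVICE_PORT
              value: "80"
```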

Container runtime and socket path

Use the CONTAINER_RUNTIME and SOCKET_PATH environment variables to set the container runtime and socket file path, respectively.

- CONTAINER_RUNTIME: Supports docker, containerd, and crio runtimes. The default value is containerd.

- SOCKET_PATH: Path of the runtime socket file. The default is the containerd socket (/run/containerd/containerd.sock). For docker, specify /var/run/docker.sock. For crio, specify /var/run/crio/crio.sock.

The following YAML snippet illustrates the use of these environment variables:

    ## provide the container runtime and socket file path
    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosEngine
    metadata:
      name: engine-nginx
    spec:
      engineState: "active"
      annotationCheck: "false"
      appinfo:
        appns: "default"
        applabel: "app=nginx"
        appkind: "deployment"
      chaosServiceAccount: litmus-admin
      experiments:
        - name: pod-api-latency
          spec:
            components:
              env:
                # runtime for the container
                # supports docker, containerd, crio
                - name: CONTAINER_RUNTIME
                  value: "containerd"
                # path of the socket file
                - name: SOCKET_PATH
                  value: "/run/containerd/containerd.sock"
                # provide the port of the targeted service
                - name: TARGET_SERVICE_PORT
                  value: "80"
                - name: PATH_FILTER
                  value: '/status'
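
For clusters that do not use containerd, both variables need to change together. As a minimal sketch, a CRI-O cluster would use the crio runtime with the socket path given earlier in this section. Whether your nodes expose the socket at exactly this path is worth confirming.

```yaml
# illustrative sketch: CRI-O runtime and socket path
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  appinfo:
    appns: "default"
    applabel: "app=nginx"
    appkind: "deployment"
  chaosServiceAccount: litmus-admin
  experiments:
    - name: pod-api-latency
      spec:
        components:
          env:
            # runtime for the container
            - name: CONTAINER_RUNTIME
              value: "crio"
            # path of the crio socket file
            - name: SOCKET_PATH
              value: "/var/run/crio/crio.sock"
            # provide the port of the targeted service
            - name: TARGET_SERVICE_PORT
              value: "80"
```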